The Ultimate Guide to the "How Much Discovery" Question

Understanding the ΔConfidence / ΔEffort Ratio

Hey! Nacho here. Welcome to Product Direction’s newsletter. If you’re not a subscriber, here’s what you missed:

Product Monetization Strategies: Balancing the Trade-Offs of Value Exchange

How to explain the financial value of continuous Discovery to your stakeholders

Additionally, the podcast is back, and in the next few weeks, we will have episodes with Teresa Torres, John Cutler, Jeff Gothelf, and more!

In the last decade, a lot of progress has been made in the adoption of discovery practices.

Still, we keep debating topics like “We don’t have time for discovery,” “How many interviews do we need?” or “How should we validate this small improvement?”

This signals a lack of understanding of why we are doing discovery.

We often say we are “validating what we decide to build,” but that is not right. We are reducing the risk and increasing the confidence that what we build will move the needle we are trying to move.

That’s why “How Much Discovery” is the wrong question.

The right question to understand when to stop discovery efforts is: “Is the increase in confidence fair for the cost I will pay to get it?”

Answering that question is hard! You need to juggle in your head:

The current level of confidence

The level of confidence you expect to reach with the discovery step

Total cost of the initiative

The cost of the discovery step

Discovery is messy, and we rarely have solid numbers for all these variables. Naturally, people have different opinions, which makes the “enough discovery” discussion harder and highly opinionated.

We must improve how we answer the question to make our discovery efforts valuable. Let’s see how.

Finding the limit

I will use a model and an example to make this article more actionable.

Starting with the example, we will use a retail e-commerce company that is considering 3 initiatives:

Expansion: open the “Furniture” category.

Growth: add a Buy Now Pay Later payment method.

Optimization: Improve the error message for incorrect addresses.

They have initial information for each of these initiatives:

Furniture: the market is big, with a lot of potential to enter and capture a decent share by leveraging their existing user base. It is costly, not only due to user experience adaptation but also due to changes required in operations and logistics.

Buy Now Pay Later: they have explicit data from competitors about usage and the share of payments made with this option in the market. They have also briefly analyzed the API of a provider they could integrate to offer the option in the payment step.

Error message improvement: they see a drop-off at the “shipping address” checkout step. They studied customer behavior and found that the error message shown when the address is incorrect is confusing.

And they have an idea of value (in thousands of euros) and effort (in person-days):

The Model

Note: Discovery is messy and non-linear. My goal is to provide a way of thinking about experiments' value and cost, not a formula to fill in the blanks mindlessly to get a yes/no result.

“All models are wrong, but some are useful.” - George Box

All “discovery steps” have an associated:

Cost - usually measured as the effort it will take for team members to run it and analyze the results

A potential increment in confidence - for which I recommend using a scale such as Itamar Gilad’s confidence meter.

A type of assumption or risk to analyze - for which I recommend using Marty Cagan’s 4 risks (more about it later)

The logical advice, made popular by Lean Startup and further explored in books like Testing Business Ideas, is that we want to gain as much confidence as possible at the lowest possible cost (learn fast, a.k.a. fail fast).

However, we must consider that the discovery step is executed in the context of an initiative for which we already have some confidence and cost.

For example, doing something “cheap,” like prototype usability tests for the Shipping Address Error Message optimization initiative, is not worth it, because the cost of discovery can be as high as delivering the feature and observing real user behavior.

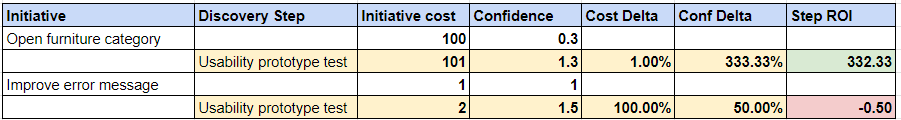

To understand how valuable a step may be, we need to compare it with:

The total cost of the initiative. We define “Δ Cost” as the percentage by which the step increases the total cost.

The current confidence value. We define “Δ Conf” as the percentage by which the step increases the current confidence.

To have a unifying metric, we could define the Return on Investment of the Discovery Step (Step ROI) as the ratio between how much we increase confidence and how much we increase cost: Step ROI = Δ Conf / Δ Cost. For example:

The same discovery step can be valuable for a high-cost initiative but not for a small optimization.

Note: I’m not expecting you to create a table and make decisions based on this. This is a good mental model to think about “enough discovery” in the right way.
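In the same spirit (a thinking aid, not a decision tool), here is a minimal Python sketch of the Step ROI definition. It assumes confidence is scored on a 0 to 10 scale like Itamar Gilad’s confidence meter and cost is in person-days; the function name `step_roi` and all numbers are hypothetical, chosen only to show that the same step scores very differently on a large and on a small initiative.

```python
def step_roi(current_conf: float, conf_gain: float,
             initiative_cost: float, step_cost: float) -> float:
    """Step ROI = ΔConf / ΔCost, with both deltas as relative increases.

    current_conf: confidence before the step (assumed 0-10 meter)
    conf_gain: expected confidence points the step adds
    initiative_cost: total cost of building the initiative (person-days)
    step_cost: cost of running the discovery step (person-days)
    """
    if current_conf <= 0 or initiative_cost <= 0 or step_cost <= 0:
        raise ValueError("confidence and costs must be positive")
    delta_conf = conf_gain / current_conf     # e.g. 0.5 means +50% confidence
    delta_cost = step_cost / initiative_cost  # e.g. 0.05 means +5% total cost
    return delta_conf / delta_cost


# Hypothetical: the same step (5 person-days, +1 confidence point) run
# when current confidence is 2, on a large and on a small initiative.
large = step_roi(current_conf=2, conf_gain=1, initiative_cost=160, step_cost=5)
small = step_roi(current_conf=2, conf_gain=1, initiative_cost=10, step_cost=5)
print(f"large initiative: {large:.1f}")  # 16.0
print(f"small initiative: {small:.1f}")  # 1.0
```

The only difference between the two calls is the initiative cost: on the small initiative the step adds 50% to the total cost, so the same confidence gain is sixteen times less attractive.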

Sequencing Discovery

Of course, we want to keep reducing risk as long as the cost is reasonable. For costly initiatives, we want to sequence a series of discovery steps to systematically reduce uncertainty.

I will stretch our example a bit to show how continued discovery may look in our scenario.

Furniture sequence

For our Furniture initiative (high cost and high uncertainty), we can start with a few activities to gain confidence about demand:

Secondary research: analyzing the existing market has a low cost (1) and produces a small gain in confidence (0.5). Still, because our current confidence is low, this small gain is a considerable relative boost and produces a high ROI.

Fake door: this experiment has a higher cost (4), since it involves coding and an A/B release, but its confidence gain is much higher (2), also producing a high ROI.

We then move to a usability test. In this case, the confidence gain is small, but since the cost is very low compared with the initiative cost, it is still worth doing.

Finally, we consider a concierge experiment to understand more about how users will buy and the operational hassles of delivering furniture. This will again increase our confidence, but since our confidence is already somewhat high and the experiment is expensive, the ROI may not justify it.
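To make the furniture sequence concrete, here is a sketch that computes Step ROI for each step in order, letting confidence accumulate. Only the secondary research (cost 1, +0.5) and fake door (cost 4, +2) figures come from the text; the starting confidence of 1, the initiative cost of 200 person-days, and the usability test and concierge figures are hypothetical placeholders.

```python
# Hypothetical initiative parameters (not from the original figures).
INITIATIVE_COST = 200.0   # person-days to build the Furniture category
START_CONF = 1.0          # starting confidence on an assumed 0-10 meter

# (step name, step cost in person-days, expected confidence gain)
furniture_steps = [
    ("secondary research", 1.0, 0.5),   # figures from the text
    ("fake door", 4.0, 2.0),            # figures from the text
    ("usability test", 2.0, 0.5),       # hypothetical
    ("concierge", 40.0, 1.0),           # hypothetical
]


def roi_sequence(start_conf, initiative_cost, steps):
    """Return (name, step ROI, confidence after step) for each step in order."""
    conf = start_conf
    results = []
    for name, cost, gain in steps:
        delta_conf = gain / conf             # relative confidence increase
        delta_cost = cost / initiative_cost  # relative cost increase
        results.append((name, delta_conf / delta_cost, conf + gain))
        conf += gain                         # the next step starts from here
    return results


for name, roi, conf in roi_sequence(START_CONF, INITIATIVE_COST, furniture_steps):
    print(f"{name:<20} ROI {roi:6.1f}   confidence now {conf:.1f}")
```

With these placeholder numbers, the ROI falls from 100 for secondary research to 1.25 for the concierge experiment: each step starts from a higher confidence, so the same gain counts for less, while the concierge step also costs far more.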

New Payment Method sequence

In this case, we start with a more obvious example: since we already have basic info like market share and competitor data, secondary research is unlikely to increase confidence. This is a good example of a generally valuable discovery step in a context where it adds no value.

The other two examples are a bit more nuanced:

Fake door is a great experiment for this scenario: the riskiest assumption is whether users will use it in our product. However, since the experiment costs 20% of the cost of delivering the feature, the ROI is not that high.

A usability test, instead, yields only a small increase in confidence, but its cost is also low, rendering a reasonable ROI.
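The same arithmetic applied to the payment method shows why context matters. This sketch evaluates each step as an alternative from the current confidence. Only the 20% ratio (fake door cost relative to delivery) comes from the text; the 20 person-day delivery cost, the starting confidence of 3, and the other costs and gains are hypothetical.

```python
# Hypothetical BNPL numbers; only the 20% fake-door cost ratio is from the text.
delivery_cost = 20.0   # person-days to integrate the provider (assumed)
current_conf = 3.0     # confidence already backed by competitor data (assumed)

# step name -> (step cost in person-days, expected confidence gain)
steps = {
    "secondary research": (1.0, 0.0),          # we already know the market
    "fake door": (0.20 * delivery_cost, 2.0),  # 20% of delivery cost
    "usability test": (0.5, 0.5),
}

rois = {}
for name, (cost, gain) in steps.items():
    delta_conf = gain / current_conf   # relative confidence increase
    delta_cost = cost / delivery_cost  # relative cost increase
    rois[name] = delta_conf / delta_cost
    print(f"{name:<20} ΔConf {delta_conf:5.0%}  "
          f"ΔCost {delta_cost:5.0%}  ROI {rois[name]:.1f}")
```

Secondary research scores zero because it adds no confidence, and the fake door’s large 20% Δ Cost drags its ROI below that of the cheaper usability test.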

Ending Sequencing: When to Stop?

Now we can answer the real question!

By looking at the ΔConf / ΔCost ratio, we can tell when it is no longer worth increasing confidence. We stop when derisking costs more than simply “betting”: deciding whether to move forward with the release and getting the real production results.

This is precisely why we want more discovery in larger initiatives: since the cost of delivery is high, there is a higher risk of wasting resources, and the same discovery step represents a smaller Δ Cost. Thus, derisking activities become more attractive, even when they have the same absolute cost as for smaller initiatives.

Of course, there is no right number for the minimum acceptable ROI. That depends on your company and circumstances. I have seen scenarios where, for example, the team is idle, so we prefer to “feed the beast” and directly build the feature (which, in turn, gives us 100% certainty of the result!).

Note: the risk of “learning in production” is, from an “emotional” perspective, that it’s hard to shut down things we have already built, even if they are not performing… a recipe for a bloated product.
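The stopping rule can be sketched as a loop that runs candidate steps in order and stops at the first one whose Step ROI falls below a minimum you choose. The function name `plan_discovery`, the `min_roi` threshold values, and the initiative figures are assumptions for illustration (only the secondary research and fake door figures come from the furniture example); as noted above, there is no universally right threshold.

```python
def plan_discovery(current_conf, initiative_cost, steps, min_roi):
    """Run steps in order; stop at the first whose Step ROI < min_roi.

    steps: list of (name, cost in person-days, expected confidence gain).
    Returns the names of the steps worth running and the final confidence.
    """
    done = []
    for name, cost, gain in steps:
        roi = (gain / current_conf) / (cost / initiative_cost)  # ΔConf / ΔCost
        if roi < min_roi:
            break  # derisking now costs more than it is worth: bet instead
        done.append(name)
        current_conf += gain
    return done, current_conf


steps = [
    ("secondary research", 1.0, 0.5),  # from the text
    ("fake door", 4.0, 2.0),           # from the text
    ("usability test", 2.0, 0.5),      # hypothetical
    ("concierge", 40.0, 1.0),          # hypothetical
]

# Hypothetical: 200 person-day initiative, starting confidence 1 (0-10 meter).
# A stricter threshold (e.g. an idle team eager to build) stops earlier.
print(plan_discovery(1.0, 200.0, steps, min_roi=5))   # 3 steps, confidence 4.0
print(plan_discovery(1.0, 200.0, steps, min_roi=20))  # 2 steps, confidence 3.5
```

Raising the threshold is one way to encode circumstances like an idle team: discovery has to clear a higher bar before it beats building directly.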

Not all Discovery Increases Confidence

While the model is useful for answering the “how much” question, the discovery step we need depends on the risky assumption we need to test.

While we want to optimize the confidence/cost ratio, sometimes the cheapest experiment is unsuitable for the assumption we are exploring, forcing us to use more costly experiments to gain the same confidence.

For example, we probably want to start by testing demand for the Furniture category initiative. A prototype test, which would give us confidence in the solution’s usability, would not be the best first step. Even if you gain confidence in usability, you will gain 0 “overall” confidence, because your riskiest assumption is whether people will use it!

As I said in my previous article, your overall confidence is the lowest confidence you have among all your risks and assumptions.
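Treating overall confidence as the minimum across risks makes this explicit. The sketch below uses Marty Cagan’s four risks (value, usability, feasibility, business viability) as keys; the confidence levels and the helper names `overall_confidence` and `overall_gain` are hypothetical.

```python
def overall_confidence(risks: dict) -> float:
    """Overall confidence is the lowest confidence among all risks."""
    return min(risks.values())


def overall_gain(risks: dict, risk: str, new_level: float) -> float:
    """How much overall confidence rises if one risk reaches new_level."""
    updated = {**risks, risk: new_level}
    return overall_confidence(updated) - overall_confidence(risks)


# Hypothetical confidence levels (0-10) for the Furniture initiative.
furniture = {"value": 1.0, "usability": 3.0, "feasibility": 4.0, "viability": 2.0}

print(overall_gain(furniture, "usability", 5.0))  # prototype test: 0.0
print(overall_gain(furniture, "value", 3.0))      # demand test: 1.0
```

Raising value confidence from 1 to 3 lifts the overall score only to 2, because viability then becomes the weakest link.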

Want to do more Discovery? Decrease the Cost of Learning

There is a hidden implication in this model: if your discovery cost is too high, or the learnings do not increase confidence enough, your activities will have a low ROI, and you will, rightfully, stop discovery even at low confidence scores.

If you feel that you are not doing enough discovery, maybe the answer is not asking for more discovery funding; it is getting better at discovery:

Reduce the cost of experimenting, interviewing users, etc.

Maximize the learnings and confidence gained.

How to do that may be the subject of another article :)

Additional notes

I simplified a few additional things worth mentioning.

I took the “confidence gain” for granted, but in practice it is far from guaranteed. We often run experiments with inconclusive results that don’t increase confidence.

The initiative's value and cost can change as we run discovery activities. For example, we thought that 50% of our users would be willing to pay for our premium service, but discovery shows that only 20% are willing to adopt it. Or a positive scenario: we do some technical spikes and discover a cheaper way to build it.

This has a few consequences, like killing the initiative because it lacks value or costs too much. This is precisely the point of discovery :)

We also do exploratory discovery, not bound to an initiative. In that case, we wouldn’t use this analysis, and the question “how much discovery” would not relate to an initiative but to our understanding of the opportunity space (which we will need to cover in another article!).

Conclusion

“How Much Discovery” is not the right question. “Is the increase in confidence fair for the cost I will pay to get it?” is a better question.

The answer changes as we progress:

If we keep finding cheap ways to reduce risk for a highly uncertain initiative, it makes sense to keep derisking it.

If we don’t have a way to reduce risk efficiently, we may need to “bet” on killing it or building it based on our current facts and levels of confidence.

You need to find your parameters for measuring the confidence increase, the cost, and the “ROI” you consider acceptable for a discovery step. And you want to always strive to reduce discovery costs.

Your context will have a strong influence. For example, the cost of building is low in young products (startups or new product lines within larger companies) with a small user base, nimble architectures, and no technical debt. Since building is cheap, the Δ Cost of each discovery step is high, which lowers its Step ROI and results in more appetite for delivering and evaluating results.

If you liked it, it will mean the world to me if you can help spread the word!